
Imagine driving on a sunny day with a dirty windshield. The sun’s rays reflect off the dust and dirt, creating a blinding glare that makes it hard to see the road ahead. You squint your eyes, adjust your visor, and try to ignore the discomfort, but you know you are risking a crash. This is what it feels like when it is time to refresh your network. You are stuck with outdated or unsupported routers, switches, and/or access points that create a technical glare.

To fix this technical glare, network engineers begin establishing a plan to reinvigorate their network. Yes, the thrilling process of refreshing the network – every network engineer’s wildest dream come true. We eagerly count down the days until we can undertake this riveting ritual that fills our souls with pure, unrestrained euphoria. Truly, network refreshes are the lifeblood that courses through a network engineer’s veins, bestowing purpose and nirvana upon our otherwise drab existence. A higher calling if there ever was one.

But in reality, network refreshes are far from euphoric experiences. They are nothing more than critical maintenance tasks that help ensure network performance, security, and compatibility with new technologies. While certainly not the most glamorous part of the job, they are essential for preventing major outages and downtime, which can be incredibly costly for businesses. Before we discuss the refresh process, let’s take a deeper look at a couple of common reasons why we consider a network refresh using traditional thinking and frameworks:

- Budget cycles and the availability of funds for capital projects:

Sometimes we may have a fixed budget for network upgrades that we need to use within a certain period, such as a fiscal year or quarter. If we don’t use that budget, we may lose it, or have it reduced in the future. Therefore, we may decide to refresh our network equipment when we have the budget available even if our existing equipment is still functional.

- Age and condition of network infrastructure:

Within 3-4 months of installing new hardware, new software and fixes/patches will become available. Staying on top of upgrades or fixes that are relevant to the configurations and protocols we’ve deployed is time-consuming. Often, our hardware falls behind in capabilities relatively quickly after its deployment. Vendors typically stop selling (end-of-sale) network equipment within a few years and stop supporting it (end-of-support) a few years after that, giving the complete lifespan of the hardware a limited window. Once the equipment reaches a certain age – let’s call it ‘ancient’ – it may become increasingly difficult and costly to maintain and repair, as spare parts and technical support could be scarce or unavailable. Additionally, the aging gear may be more prone to failures, malfunctions, and security vulnerabilities, which can cause network downtime, data loss, and user dissatisfaction.

The traditional network refresh process

We begin our network refresh journey by introducing our network team. In more ways than one, they are just like the postal service with a never-ending influx of “deliveries.” Just as the postal service must handle an endless stream of letters and packages arriving daily, the network team faces a never-ending barrage of service requests, troubleshooting tickets, and project demands constantly coming their way. Most of the enterprise campus network is outdated (some of it could legally drink if it were a person), and our team has sent a request for funding to refresh as many switches and access points as possible. If the users view Internet access as more important than water, then the infrastructure should be just as important, right?

Day -1

OK, the network team has received the green light to upgrade the network. Budget has been set aside; however, it’s obviously not enough to refresh our entire campus network. This is common, so we’ll have to incrementally upgrade the network across multiple capital projects by selecting only a handful of buildings to upgrade. This can be done in one of a few ways: by prioritizing the buildings with the highest network demand and usage, the greatest strategic value and potential to the business, or the lowest network upgrade cost and complexity. For this upgrade, we’re selecting seven specific buildings because they’re running the oldest hardware, and the configurations are nowhere near optimal thanks to our predecessor who configured them ages ago (he’s long since retired, which makes it okay to blame him).

We’ve selected our seven buildings. So, what’s the next step? Should we take inventory of the equipment to get an accurate bill of materials and better understand costs? That might have been the case years ago. However, given the ubiquity of wireless access today, we start with port inventory. Why? A lot has changed since our last refresh (when flip phones were still a thing). Almost all the devices that we’ve provisioned to our employees are Wi-Fi capable; they don’t ship with a wired port. There are also potential cost savings when refreshing the network.

With some applications now available in the cloud, some of our business departments have transitioned to wireless laptops. This is because fewer applications require desktop power (as the critical applications are now cloud-hosted), thus freeing up at least half a switch. Something else to consider: does it make sense to connect a printer, which consumes very little traffic throughout the year, to a ~$100 switch port, or should we move the printer to Wi-Fi? Our environment has approximately 400 printers. Moving these low-traffic, mostly silent devices to Wi-Fi could reduce our total switch count.
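To put rough numbers on the printer question, here is a back-of-the-envelope sketch. The ~$100 per port and the 400 printers come from the text above; the 48-port switch size is an assumption made only for illustration, and the function names are hypothetical.

```python
# Back-of-the-envelope estimate for moving printers from wired ports to Wi-Fi.
# PORTS_PER_SWITCH is an assumed value, not a figure from our environment.
PRINTERS = 400
COST_PER_PORT_USD = 100   # approximate cost per switch port
PORTS_PER_SWITCH = 48     # ASSUMPTION: a common access-switch size

def port_cost_freed(devices: int, cost_per_port: int) -> int:
    """Dollar value of the switch ports no longer needed."""
    return devices * cost_per_port

def full_switches_freed(devices: int, ports_per_switch: int) -> int:
    """Whole switches' worth of ports freed (an upper bound)."""
    return devices // ports_per_switch

print(port_cost_freed(PRINTERS, COST_PER_PORT_USD))       # 40000
print(full_switches_freed(PRINTERS, PORTS_PER_SWITCH))    # 8
```

The switch figure is an upper bound: printers are spread across many closets, so the freed ports only remove a switch where enough of them land in the same stack.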

We’ve surveyed our network closets and found that many of the ports were not active (i.e., not blinking). We don’t have a fancy network management system, so we checked the interface counters across all the ports via CLI to see which ports were not in use. We repeated the check over a few weeks to ensure unused ports remained unused. After performing port inventory, we found that we could reduce the switch count by one or two switches per floor in each building.
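The comparison of counters over several weeks can be sketched in a few lines of Python. This is a minimal illustration, assuming the counters have already been copied out of the CLI into one dictionary per collection date; the snapshot format, interface names, and function name are hypothetical.

```python
from typing import Dict, List

# Each snapshot maps an interface name to its cumulative packet counter
# (input + output) on one collection date. Hypothetical format.
Snapshot = Dict[str, int]

def find_unused_ports(snapshots: List[Snapshot]) -> List[str]:
    """Return ports whose counters never changed across all snapshots."""
    if len(snapshots) < 2:
        raise ValueError("need at least two snapshots to compare")
    baseline = snapshots[0]
    unused = []
    for port, start_value in baseline.items():
        # A port is treated as unused only if every later snapshot
        # shows exactly the same counter value as the first one.
        if all(snap.get(port) == start_value for snap in snapshots[1:]):
            unused.append(port)
    return sorted(unused)

# Three weekly collections for one (made-up) switch.
weekly = [
    {"Gi1/0/1": 1500, "Gi1/0/2": 0, "Gi1/0/3": 42},
    {"Gi1/0/1": 9800, "Gi1/0/2": 0, "Gi1/0/3": 42},
    {"Gi1/0/1": 20111, "Gi1/0/2": 0, "Gi1/0/3": 57},
]
print(find_unused_ports(weekly))  # ['Gi1/0/2']
```

Note that `Gi1/0/3` looked idle for two weeks and then passed traffic, which is why a single check is not enough. A counter reset (for example, after a reboot) shows up as a change, so the port is conservatively kept as in use.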

The wireless access points (APs) are getting updated too as part of this network refresh (after all, they deserve to look their best). Our buildings have a simple layout – a long hallway with offices and conference rooms lining each side, like a fancy hotel without the overpriced mini-bars. Our wireless design, which was crafted in the golden age of dial-up modems, has APs mounted strategically along the hallway. Since this design still works like a charm (unlike that old ’90s website you made in college), we’re not about to mess with perfection. We’ll keep the AP layout as-is, untouched by human hands, like a priceless museum artifact. As for the AP count across our seven buildings, we’ll consult the ancient scroll known as ‘the inventory spreadsheet’ – a sacred tome passed down through generations of IT professionals.

Day 0

Armed with device counts, we’re ready to work with potential vendors. For this refresh, we’re going to investigate three different vendors, one of them being the incumbent vendor. We selected the other two potential vendors based on feedback from industry peers.

First, we engage with each vendor to share the current state of our network, our requirements, and how we think the network will need to support upcoming business initiatives. The vendors, in turn, share their stories around their hardware and software, and what we notice is that each of them has different architectures and ways to manage them (even our tried-and-true incumbent has switched things up). These architectures are like technology smoothie bowls – a blend of different protocols (e.g., BGP, VXLAN, MPLS, etc.) and management platforms (e.g., controller-based, cloud-based, or a hybrid of the two – with and without CLI access).

To make sure everything works seamlessly together, we’ll require, for now, a demonstration based on a few of our key use cases. Later, we’ll probably need to expand to a more detailed proof-of-concept including some training or enablement. After all, we’re not exactly experts (yet) on operating and maintaining networks that use these fancy overlay-based protocols. It’s like learning a new language – you can’t expect to be fluent overnight.

One trade-off that we’ll have to keep in mind is that it is inefficient to run two architectures; if we decide to deploy a new architecture for our lucky seven buildings, we’ll still need to keep the legacy system humming along until we can secure more budget for another capital project. It’s like having one foot in the past and one in the future – a bit of a technological balancing act that never ends.

Next, we’ll need to understand the budgetary cost for each vendor. To do this, we’ll need to figure out which hardware platform(s) could be a candidate for our environment.

We work with the vendors to understand their offerings:

| Vendor | Number of Different Access Switch Models | Number of Different Access Point Models |
|---|---|---|
| Vendor A (Incumbent) | >60 | >20 |
| Vendor B | >15 | >10 |
| Vendor C | >80 | >25 |

With our requirements, each vendor narrows down their product catalog to a few options of switch and access point models each. We would like to say that we make our selections based upon trade-offs, including price, performance, and features we think we might need in the future; however, the truth is that the decision was mainly based upon the estimated costs of the equipment.

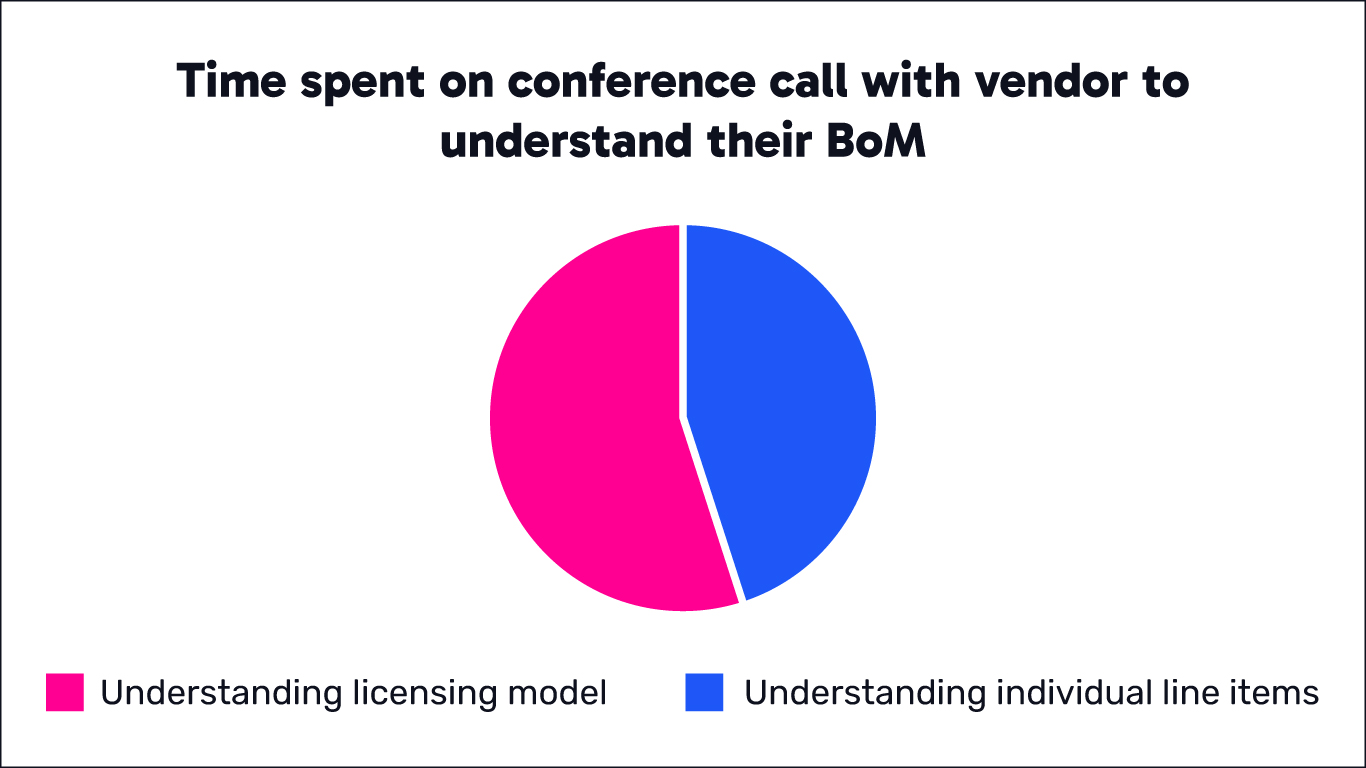

After almost a week of research and deliberation in figuring out which products we think would fit our environment, it’s time to get a better understanding of the final budget from each vendor so that we can compare costs and technical trade-offs. Each vendor has prepared a final bill of materials (BOM) that we review with them on a conference call. Here is a chart that shows how we spend that time with each vendor:

Look at that humble pie chart above. Yes, that’s right – the lion’s share of our one-hour vendor discussion is spent trying to wrap our heads around their licensing models (and that’s even true for our trusty incumbent vendor). It’s no exaggeration: this is the harsh reality we’re facing across all vendors these days as they introduce these newfangled subscription models for their software. We do spend a bit of time reviewing the hardware and software capabilities, as well as going over the nitty-gritty line items (verifying optic types and quantities, route table sizes, etc.). But let’s be real – that’s just the side to the main course of licensing madness. Maybe we should start a support group: ‘Licensing Anonymous’ has a nice ring to it.

After a decision is made as to which vendor we move forward with, we must tighten up the BOM. At a high level, we have the correct quantities of switches, access points, and software licensing, so it now comes down to materials based on our migration. Here are a few examples:

- Do we have the correct quantity and types of SFPs? For this, we create a simple connectivity matrix or diagram.

- Do we have enough patch cables for each cable type (e.g., CAT6A, multi-mode/single-mode fiber)?

- Is there enough space in the rack for a parallel cutover with existing equipment? If not, will we need another rack in our MDF?
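The connectivity matrix mentioned in the first item can be as simple as a list of links, from which the SFP and cable counts fall out directly. The following is a rough sketch, not a prescribed tool; the device names, optic types, and cable labels are made up for illustration, and it assumes the same optic type is used at both ends of a link.

```python
from collections import Counter
from typing import List, NamedTuple

class Link(NamedTuple):
    """One row of a (hypothetical) connectivity matrix."""
    a_device: str
    b_device: str
    optic: str   # optic type, assumed identical at both ends
    cable: str   # cable type for this run

def optics_needed(links: List[Link]) -> Counter:
    """Count SFPs per type: every link needs one optic at each end."""
    counts: Counter = Counter()
    for link in links:
        counts[link.optic] += 2
    return counts

def cables_needed(links: List[Link]) -> Counter:
    """Count cable runs per cable type: one cable per link."""
    return Counter(link.cable for link in links)

# Made-up uplinks from two building stacks to the core.
links = [
    Link("bldg1-idf1", "mdf-core1", "10G-SR", "multi-mode"),
    Link("bldg1-idf1", "mdf-core2", "10G-SR", "multi-mode"),
    Link("bldg2-idf1", "mdf-core1", "10G-LR", "single-mode"),
]
print(dict(optics_needed(links)))  # {'10G-SR': 4, '10G-LR': 2}
print(dict(cables_needed(links)))  # {'multi-mode': 2, 'single-mode': 1}
```

Comparing these totals against the BOM before the maintenance window is cheaper than discovering a missing optic during it. Cable lengths and spares are not modeled here and still have to come from the closet survey.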

Although laborious, we must survey each MDF and IDF to ensure we have all the required materials, as we always schedule maintenance windows for off-hours on weekends. Finding out that we do not have the correct number of SFPs or cables that are long enough during the migration would set us back at least another week.

Day 1

Once the equipment arrives, we must stage it. Here is our high-level process for the switches:

- Unbox the switch

- Inventory the switch

- Assign a license to the switch

- Upgrade the switch

- Apply baseline configuration to the switch

- Pre-stack the switch

- Add switch to the RADIUS server as a client

The entire process for a single switch takes about an hour. For our seven buildings, we’ve ordered twenty-eight switches, and we’ll use four switches per stack, giving us a total of seven stacks (one stack per building). We can stage a few switches simultaneously, so the entire process takes around two days. We did make an effort to reduce the time it takes to stage the equipment by investigating a zero-touch deployment option, which consisted of minor modifications to our DHCP server that would serve a Python script to bootstrap each device. Ironically, that seemed like a lot of touches for a zero-touch process (like those ‘self-cleaning’ ovens that require you to spend hours scrubbing them before they’re clean).
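The staging estimate is simple arithmetic, sketched below. The switch count, stack size, and one hour per switch come from the text above; the number of switches staged in parallel is an assumption, since "a few" is not an exact figure, and the function name is hypothetical.

```python
import math

SWITCHES = 28
SWITCHES_PER_STACK = 4
HOURS_PER_SWITCH = 1.0

def staging_hours(switches: int, hours_per_switch: float, parallel: int) -> float:
    """Bench hours needed when `parallel` switches are staged at once."""
    if parallel < 1:
        raise ValueError("parallel must be at least 1")
    batches = math.ceil(switches / parallel)
    return batches * hours_per_switch

print(SWITCHES // SWITCHES_PER_STACK)                  # 7 stacks, one per building
print(staging_hours(SWITCHES, HOURS_PER_SWITCH, 1))    # 28.0 hours, one at a time
print(staging_hours(SWITCHES, HOURS_PER_SWITCH, 2))    # 14.0 hours, ASSUMED two at a time
print(staging_hours(SWITCHES, HOURS_PER_SWITCH, 3))    # 10.0 hours, ASSUMED three at a time
```

With two or three switches on the bench at once, the 10 to 14 hours of staging fits the roughly two working days quoted above, once interruptions are factored in.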

With all the new network equipment staged and ready, the next big undertaking is the physical installation and cabling process across the seven buildings. Here are a couple of things we encounter during the intensive build-out stage (or, as we call it, the ‘grunt work’):

- Some of the network closets are old and lack proper airflow for cooling. These closets do have air conditioners installed; however, it looks like they’ve never been serviced, and they are blowing room-temperature air like a fan would. We make note of this to (hopefully) be addressed later and continue racking and stacking the gear.

- Most closets have little to no cable management. It seems our predecessors did not find it important to dress and label most of the cables. When installing the new equipment, we take the time to tediously ensure proper cable management and labeling, as it is very important for us to understand which device plugs into which switch.

After weeks of meticulous planning, staging equipment, and the arduous physical installation across seven buildings, the new network infrastructure is finally in place. The network engineers can take a moment to wipe the sweat and drywall dust from their brows before returning to work – you know – that easy desk job they signed up for. With the heavy lifting done, they’ll begin the riveting task of configuring and integrating all the new gear, diligently checking and testing every single link because nobody wants a hastily deployed network, right?

Once everything is precisely tuned and optimized to within an inch of its life, engineers can cut over to the new blazing-fast network that’s (almost) totally immune to the futuristic cyber-attack vectors those TV hacker guys use. Though an exhaustive process that likely took years off the network teams’ lives, the entire organizational network has been revitalized with up-to-date equipment providing faster speeds, improved security, and enough capacity to handle an all-staff 4K video call at any moment.

The Horror Story

The true horror of a network refresh is not in the grueling technical details or backbreaking labor itself, but in the weary acceptance that this byzantine process is simply par for the course. As we’ve laid out the meticulous staging, installation nightmares of cable dressing, vendor babysitting, translating the bill of materials into English, and the Sisyphean slope of configuration – most network engineers will likely nod along, thinking “yep, that’s just how it is.”

There is an inherent tragedy in how comprehensive IT lifecycles have been endlessly repeated in this Kafkaesque way, without the professionals meant to be on the cutting edge ever stepping back to question whether this admittedly soul-crushing experience must be the immutable cost of technological progress. It’s a subtle horror that millions of skilled workers have been inadvertently gaslit into believing the abusive relationship with convoluted legacy processes and unruly, outdated equipment is simply the brutal reality they signed on for.

A note for Senior Leadership (if they happen to have read this far)

No one is running out of college to do this type of work. Your teams should be focused on innovation, not maintenance. We will cover how we can achieve this in part 2 of this series, where we revisit this same scenario but, as if we were in an alternate universe, choose Nile to refresh our network.